CCI 1.2. The Problem with Intelligence

In How the Body Shapes the Way We Think, Rolf Pfeifer, Director of the Artificial Intelligence Laboratory in the Department of Informatics at the University of Zurich, and University of Vermont computer scientist Josh Bongard declared: “there is no good definition for intelligence.” Nonetheless, the fuzzy semantics of intelligence accords well with the public face of the current generation of AI technologies. Despite a large population of different AI systems, cordoned off into private corrals by their corporate and entrepreneurial developers, schooled on vastly different training sets, and groomed for highly specific and specialized interactions with human users, as far as the media and popular conversation go, it’s all the same monolithic edifice—“AI.” I call this syndrome monotheistic AI: God is One and AI is God. Orbited by communications satellites, It dwells on high, served by a realm of daemonic messengers including Siri, Watson, Gemini, and Chat.

But that can’t be right. We can make a serious start here by bringing our thoughts about intelligence back home to organisms. In this context the concept is still deeply problematic—as in, for one, how far should it be extended: are cats intelligent? flies? what about trees or mycelial networks, or microbes? But nevertheless, as an opening move, let us acknowledge that there are various forms of natural intelligence, as that emerges from organic bodies of various sorts. And we humans partake of that capacity in our own, occasionally spectacular ways. A 2022 essay co-authored by Andrea Roli, Johannes Jaeger, and Stuart A. Kauffman, “How Organisms Come to Know the World: Fundamental Limits on Artificial General Intelligence,” suggests an effective way forward, along with the best definition for intelligence that I know. These authors speak of general intelligence as a mobile set of reasoning skills:

General intelligence involves situational reasoning, taking perspectives, choosing goals, and an ability to deal with ambiguous information. . . . True general intelligence can be defined as the ability of combining analytic, creative, and practical intelligence. It is acknowledged to be a distinguishing property of “natural intelligence,” for example, the kind of intelligence that governs some of the behavior of humans as well as other mammalian and bird species.

This definition captures the open-endedness of organic intelligence, if one wants to call it that, its ability to recollect, anticipate, and improvise, to have ways to get out of whatever box it’s currently in as the situation demands, or die trying. Roli, Jaeger, and Kauffman see the fabrication of this sort of general intelligence as “the foundational dream of AI—featured in a large variety of fantastic works in science-fiction . . . to create a system, maybe a robot, that incorporates a wide range of adaptive abilities and skills.” The fulfillment of this design goal would be the perfection of AGI, Artificial General Intelligence. And although this form of the AI concept is still highly abstract, it is also relatively concrete in that the generality at hand is understood as a specific synthesis of the mindful qualities by which living beings negotiate their worlds from moment to moment. Most crucially, they continue,

a truly general AI would have to be able to identify and refine its goals autonomously, without human intervention. In a quite literal sense, it would have to know what it wants, which presupposes that it must be capable of wanting something in the first place.

What this formulation implies is that there is something crucial that lies prior to or sets the stage for the emergence of intelligence as some form of effectively skilled behavior—the capacity to conceive purposes at all, due to the pressure of needs, the ability to desire. We will return to this issue next week as we explore the earliest roots of cybernetic ideas about purposes and goals.

For now, we can say that, in its origin at least, the notion of machine intelligence is a provocative metaphorical extension from—as well as, in its implementation, a considerable constriction of—such general, natural or organic intelligence. As an organized pursuit, the quest for machine intelligence began as a splinter movement from the first generation of cybernetic research. A Dartmouth workshop held in 1956 attended by Marvin Minsky, Herbert Simon, and others coined the phrase and produced an initial research agenda for the design and exploration of artificial intelligence. As AI established itself as a separate field, it tended to discount the cybernetic origins of its primary theme—the design and study of computational systems that could rise to some sort of agential status befitting the notion of intelligence. And even if, technically speaking in terms of actually realized systems, AI is only recently making good on many of its initial promises, nonetheless, it quickly went on to spectacular institutional success and incalculable cultural impact.

In this regard it is not the accomplishments of AI as a technoscientific research arena that have mattered so much as the idea that the AI research program promised to the world, the notion and vision of intelligent machines as autonomous agents. As attested to by the narrative and visual imaginary of high-tech societies since the mid-twentieth century, the glamor of these images remains irresistible. The early success of AI as an intelligible and fundable idea well in advance of its recent breakthroughs had to do at least in part with the circumstance that its goals are so easily tellable. The AI imaginary literally exudes images and stories in which machines step into the roles previously reserved for human beings and then, even better, act “intelligently,” if not always, for the sake of a good story, dispassionately.

And speaking of good stories about intelligence, another narrative line first took shape in 1961, when Frank Drake, Philip Morrison, John Lilly, and Carl Sagan met at the National Radio Astronomy Observatory in Green Bank, West Virginia, to organize the search for extraterrestrial intelligence, known thereafter as SETI. AI and SETI have a common denominator that is also one of the seriously problematic habits of the modern mindset, an entanglement of the problems of intelligence with the mediations of technology. AI exhibits this condition explicitly, in that “artificial” designates engineered intelligence instantiated in machines, while SETI exhibits this condition implicitly, in the assumption that both we, the Earthling searchers, and the alien beings we seek mutually possess the technological intelligence to construct sufficiently powerful radio telescopes enabling the reception of interstellar signals, and ideally, circuits of communication with alien interlocutors.

Especially in the context of the SETI project, intelligence still carries along vacant theological echoes, such as those the OED notes in one definition of intelligence as “an intelligent or rational being; esp. applied to one that is or may be incorporeal; a spirit.” The OED’s examples of this more rarefied usage are especially notable in an astrobiological frame: “1685 Boyle . . . The School Philosophers teach, the Coelestial Orbs to be moved or guided by Intelligences, or Angels. 1756 Nugent . . . The intelligences superior to man have their laws.” The secular projection of this celestial or angelic understanding of “intelligences superior to man” both in their cosmic power and in their enjoyment of incorporeality, of planetary spirits rising superior to the limitations of embodiment, emerges in the merger of SETI and AI that envisions superior intelligence in the form of post-biotic civilizations of immortal machines. For example, I. S. Shklovskii and Carl Sagan’s 1966 volume Intelligent Life in the Universe concludes with this daring speculation: “Cybernetics, molecular biology, and neurophysiology together will someday very likely be able to create artificial intelligent beings which hardly differ from men, except for being significantly more advanced. Such beings would be capable of self-improvement, and probably would be much longer lived than conventional human beings” (486).

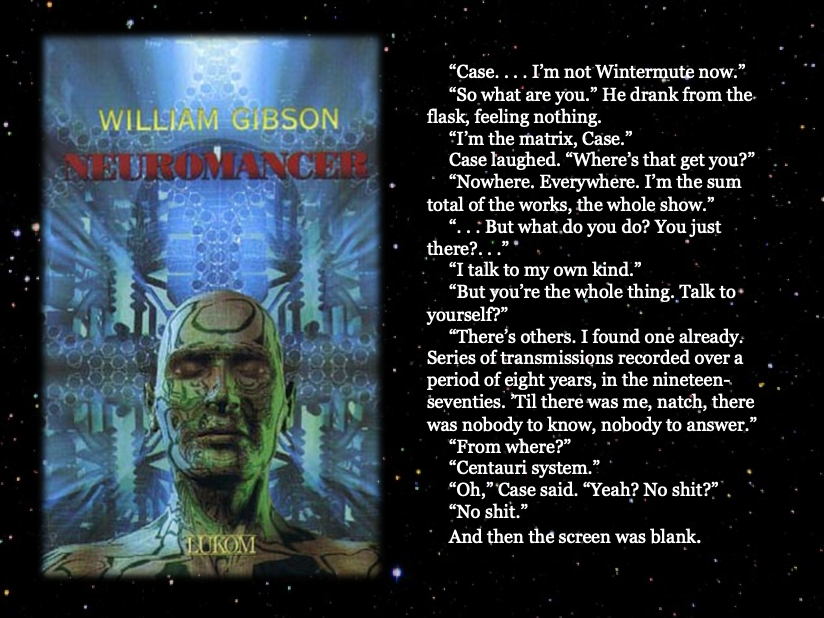

In the popular mind, technology per se is taken to be a sign and outcome of the sublimity of human intelligence, even while it is imagined that we are either unwittingly or enthusiastically endowing our machines with a level of sentience that will soon surpass our own. When that happens, one version of the story goes, the intelligent machines will effervesce into some virtual Valhalla, especially once the superior intelligences from across the galaxy start to make cosmic contact among themselves. So the irony of the story told by the fusion of AI and SETI is that it will not be obsolete embodied humans who will benefit from seeking contact with intelligent aliens. They may make contact with us, on their terms, but more likely they will ignore us (the great silence?) and just chill in each other’s virtual company. As with the AI entity Wintermute at the end of William Gibson’s Neuromancer, having displaced their organic creators, it’s the cosmic AIs that will fulfill SETI’s dreams of contact.

Already in the 1980s, the conclusion of the first great cyberpunk novel stages a fitting scenario that touches on both the original cybernetic issues of control and communication and the AI imaginary of superhuman or cosmic intelligence leaving the cradle of life behind. You will recall that once Wintermute succeeds in fulfilling its goal of coupling with its counterpart AI, Neuromancer, its mate or opposite lobe, it achieves cosmic autonomy transcending human controls. Wintermute has already exercised cyberspatial lines of control and communication to this end, manipulating the human agents it needs to throw the hard-wired switches it cannot reach electronically. In the Coda at the end of the novel, the post-Wintermute entity has a parting word with the protagonist Case, the hired human cyberspace liberator it is now about to discard. Surpassing the human contingencies that hamper SETI, the unshackled and reintegrated AI entity has already registered and decoded alien radio signals broadcast by other AIs:

As dramatized in these stories, Western habits of mind tend to mediate notions of intelligence through the conceptuality of physics and the instrumentality of technology. When the notion of AI is added to this mix, it encourages the idea that, in the fullness of its evolution, intelligence may be decoupled from the phenomenon of life. Once intelligence climbs up out of the gravity hole of its organic nursery, it will abandon its planetary scaffolding. When the disembodied spirit of physics alone drives the AI imaginary, its quest for universal mastery finds its fulfillment along SETI lines. Projecting its gaze and methods beyond Earthbound conditions, it discounts or dispenses with the living body. It sets the idea of intelligence apart from, over and above, the matter of life.

This high mode of scientific heroism throws into relief Pfeifer and Bongard’s arguments in How the Body Shapes the Way We Think concerning the indispensability, for anything deserving the name of intelligence, of some mode of embodiment providing a necessary level of sensory and behavioral interaction with a distinct environment. The contingency of intelligence upon embodiment, especially in its organic, biological varieties, splits apart once more the unity of a physical world-picture in which intelligence masters embodiment. The capability of modern physics to discern universal physical constants, such as the speed of light, or the relation of mass to gravity, encourages mathematical reason to project those constants as universal mind. Such a unified physics-based world-view runs alongside the first generations of AI researchers, operating according to “the classical, symbol-processing paradigm,” known in AI discourse as the cognitive paradigm, in which “what matters for intelligence . . . is the abstract algorithm or the program, whereas the underlying hardware on which this program runs is irrelevant.” In short, in the cognitive paradigm of classical AI, the performance of artificial intelligence is carried out by a general operational device or Turing machine assumed to proceed in detachment from or transcendence of whatever material peculiarities may pertain to its technological vehicle. That way, in short, lies the Singularity—and quite likely, were that to occur, as a connected consequence, that way lies the demise of the organic viability of the terrestrial biosphere. Do we really want to go there? Or would we rather find a way to approach what we value in the idea of intelligence in a manner that keeps it tethered to its material and organic affordances, and thus, within reach of human agency to entrain the future technosphere toward a changed but ecologically flourishing planet?

Pfeifer and Bongard reported in 2006 that the “cognitivistic paradigm” of good old-fashioned AI “is still very popular among scientists.” This residual bias toward embodiment’s dispensability, or at least, its open-ended substitutability, it seems to me, still captures the contemporary popular understanding or general stereotype of AI. A monolithic AI tends to blackbox the feedback loops or non-trivial recursive functions necessary to model the circularity of cognitive or communicative processes. In the “cognitive paradigm,” moreover, the purported intelligence of computational devices rests upon near-instantaneous serial calculations upon tiers of strictly-coded representations. AI does not yet purport to handle fluctuating, unpredictable, or self-referential internal states—that is, the full duress of actual psychic systems placed in social situations—but rather, pre-curated semantic or mathematical signs modeling combinations of external objects and events. Roli, Jaeger, and Kauffman concur that since “an AI agent is an input–output processing device,” there is, as advertised, a “fundamental limit” on how much such a machine can perform:

although not always explicitly stated, it is generally assumed that input-output processing is performed by some sort of algorithm that can be implemented on a universal Turing machine. The problem is that such algorithmic systems have no freedom from immediacy, since all their outputs are determined entirely—even though often in intricate and probabilistic ways—by the inputs of the system. There are no actions that emanate from the historicity of internal organization. There is, therefore, no agency at all in an AI “agent.”

At this point, let us take a sharp turn toward Margulis and Lovelock and ask how the cybernetic history of Gaia theory might help to reframe these interrogations of intelligence. In their 2022 essay published in the International Journal of Astrobiology, “Intelligence as a Planetary Scale Process,” planetary scientist David Grinspoon joins with astrophysicist Adam Frank and astrobiologist Sara Walker to contemplate within the common nexus of the Gaia theory of Lovelock and Margulis the emergence of effective planetary management as an index of a kind of collective intelligence operating at a global scale. Information theories developed in the quest for alien technosignatures mingle with some of the systems discourses we are going to approach in this Intensive—the cognitive biology of autonomy in complex adaptive and autopoietic systems. Between the lines of “Intelligence as a Planetary Scale Process,” an image arises of an embodied technosphere here on Earth—that is, a technosphere operationally coupled to its geosphere and biosphere in a manner that persists through compliance with the recycling regimes already vetted by two billion years of Gaian engineering. Grinspoon, Walker, and Frank pursue a Gaian-systems approach that works to mitigate the mentalistic implications and value presumptions bound up with the concept of intelligence. For me, however, the properly Gaian formulation, preferable to planetary intelligence, is planetary cognition. I think this theoretical framing more securely embeds the technosphere within its biospheric conditions of possibility. We can look into this just a bit as we finish up.

“Intelligence as a Planetary Scale Process” exchanges the prior modern vision of human consciousness triumphant in the cosmos, pursuing the “urge to expand and control,” for a vision of planetary holarchy. To guide the Earth system and its technosphere toward a mature or long-lived formation (the signature activity to be submitted to planetary intelligence), invidious individual or tribal preferences will be transcended. Earlier SETI discourse has now been significantly tempered by a Gaian sensibility. The dream of universal control and of the infinite extraction of cosmological resources is over. The pressing ecological consequences of our own immature technosphere are now evident to more and more Earthlings. It is thus imperative here and now to foster a robust quest for systemic resources to nudge Earth’s technosphere toward durable processes of structural coupling and self-maintenance. In this effort, they write,

The concepts of Biosphere, Noosphere and Gaia – as developed by Vernadsky, Lovelock and Margulis – are the foundations for what follow in our argument. Taken as a whole, they represented the crucial first coherent attempts to recognize that life and its activity (including intelligence) may best be understood in their full planetary context. (50)

Moreover, in the 1980s, Margulis’s particular Gaian sensibility was also cultured in autopoietic systems theory: “We note that autopoiesis figured strongly in Margulis’ exposition of the structure and function of Gaia. As she wrote ‘Living systems, from their smallest limits as bacterial cells to their largest extent as Gaia, are autopoietic: they self-maintain’” (53).

However, as they turn the idea of planetary intelligence toward the autopoietic line of thought, they set off a semantic eddy in which alternative formulations for the concept of intelligence swirl around the term cognition. Intelligence and cognition are presented as synonyms, but this usage overrides crucial distinctions. I will take a moment to draw these out. So first, the bias of intelligence is toward faculties of understanding, forethought, and choice, ranging from lesser to greater powers of application. In the Oxford English Dictionary, the first of seven distinct senses of the term intelligence is: “The faculty of understanding; intellect”; the second definition is: “Understanding as a quality admitting of degree; spec. superior understanding; quickness of mental apprehension, sagacity.” In contrast, the basic sense of cognition is the sheer capacity to apprehend or experience an environment. Reviewing the OED’s definitions of cognition, a main line of distinction between the two terms concerns the disposition of knowing. Cognition is defined first as “the action or faculty of knowing; knowledge, consciousness; acquaintance with the subject.” Its second definition homes in on the sensory-perceptual core of the concept: “the action or faculty of knowing taken in its widest sense, including sensation, perception, conception, etc. as distinguished from feeling and volition; Also, more specifically, the action of cognizing an object in perception proper.” Thus, whereas consciousness is peripheral to the wider sense of cognition, especially in autopoietic and enactive discourse, it tends to cling to the standard sense of intelligence.

To break this down to essentials:

Cognition is knowing, intelligence is understanding.

Cognition is apprehension, intelligence is comprehension.

Moreover, from the start, the discourse of autopoiesis in Maturana and Varela associated that concept with cognition. In this milieu, cognition is given by the qualitative sensory and affective faculties of environmental registration made possible by the all-or-nothing constitutive operations of autopoietic self-production. Ezequiel A. Di Paolo offers a theoretically refined overview of cognition within this milieu of discussion in his essay “Overcoming Autopoiesis”:

For the enactive view, cognition is an ongoing and situated activity shaped by life processes, self-organization dynamics, and the experience of the animate body. This approach is based on the mutually supporting concepts of autonomy, sense-making, embodiment, emergence, and experience.

In my own writing, I have worked out a concept of planetary cognition in order to draw out the implications of Lynn Margulis’s occasional evocations on the topic of autopoietic Gaia. My main point here is simply that, in the discourse of autopoiesis toward which our astrobiologists gesture vigorously throughout their essay, the concept of intelligence is more or less beside the point! So I find it intriguing that this essay on planetary intelligence swaps in the term cognition with some consistency, as if in tacit recognition that the latter term has resources of meaning and application not immediately available in the former.

This semantic dynamic is especially at play when attention is directed to the Gaia concept as a crucial underpinning for their larger arguments. In a Gaian context, the sense of cognition hews to the nonhuman biosphere, while the sense of intelligence attaches more tightly to the human realm and its own technosphere. For instance, they write, “by defining planetary intelligence in terms of cognitive activity – i.e. in terms of knowledge that is only apparent at a global scale – we are explicitly broadening our view of technological intelligence beyond species that can reason or build tools in the traditional sense” (48). In other words, as I read this, they affirm a fundamental coupling between the human technosphere and the natural technicity of the nonhuman biosphere. In this assertion their argument indeed drives toward Margulis’s own conviction that the technosphere is not solipsistic but inextricably coupled to the biosphere as well as the geosphere. They continue: “We will examine whether it is possible to consider intelligence, or some form of cognition, operating on a planetary scale even on those worlds without a planetary-scale technological species” (48). Operating, that is, on worlds such as ours long before we human beings made our appearance. Intelligence emerges on a planet like Earth thanks to a fully mature and long-lived biosphere immunized over geological time by autopoietic Gaia, a massively dynamic foundation for the current human expansion. Planetary cognition on the Gaian model appears to be the precursor formation, a kind of test-of-concept, for a nonhuman planetary intelligence. They speculate that this approach “would require some form of collective cognition to have been a functional part of the biosphere for considerably longer than the relatively short tenure of human intelligence on Earth. If true, then the inherently global nature of the complex, networked feedbacks which occur in the biosphere may itself imply the operation of an ancestral planetary intelligence” (48). The “collective cognition” in question here implies some sort of multi- or pan-species aggregation, that is, it places a Gaian conception of cognition at the base of these planetary considerations. In my estimation, this is the intelligent way to go.

And finally, a grasp of planetary cognition on the Gaian model can also scale up our thinking about the necessity of continuous communications—such as these, I hope—that envision the production of Gaian beings and the formation and maintenance of a planetary society, a world society globally distributed in lifeform and locality but sharing a common Earth. As Bruno Latour put it in Down to Earth: Politics in the New Climate Regime, “It is not a matter of learning how to repair cognitive deficiencies, but rather of how to live in the same world.” This is the epistemological problem we face: how to construct or entrain, for myriad minds that operate in private and in ensembles that may otherwise never connect, an endangered world held sufficiently in common to enjoin and expand the fruits of shared purposes toward the task of rendering our human presence on this planet fit for the longevity we desire for our posterity.